QwQ-32B Reasoning Model

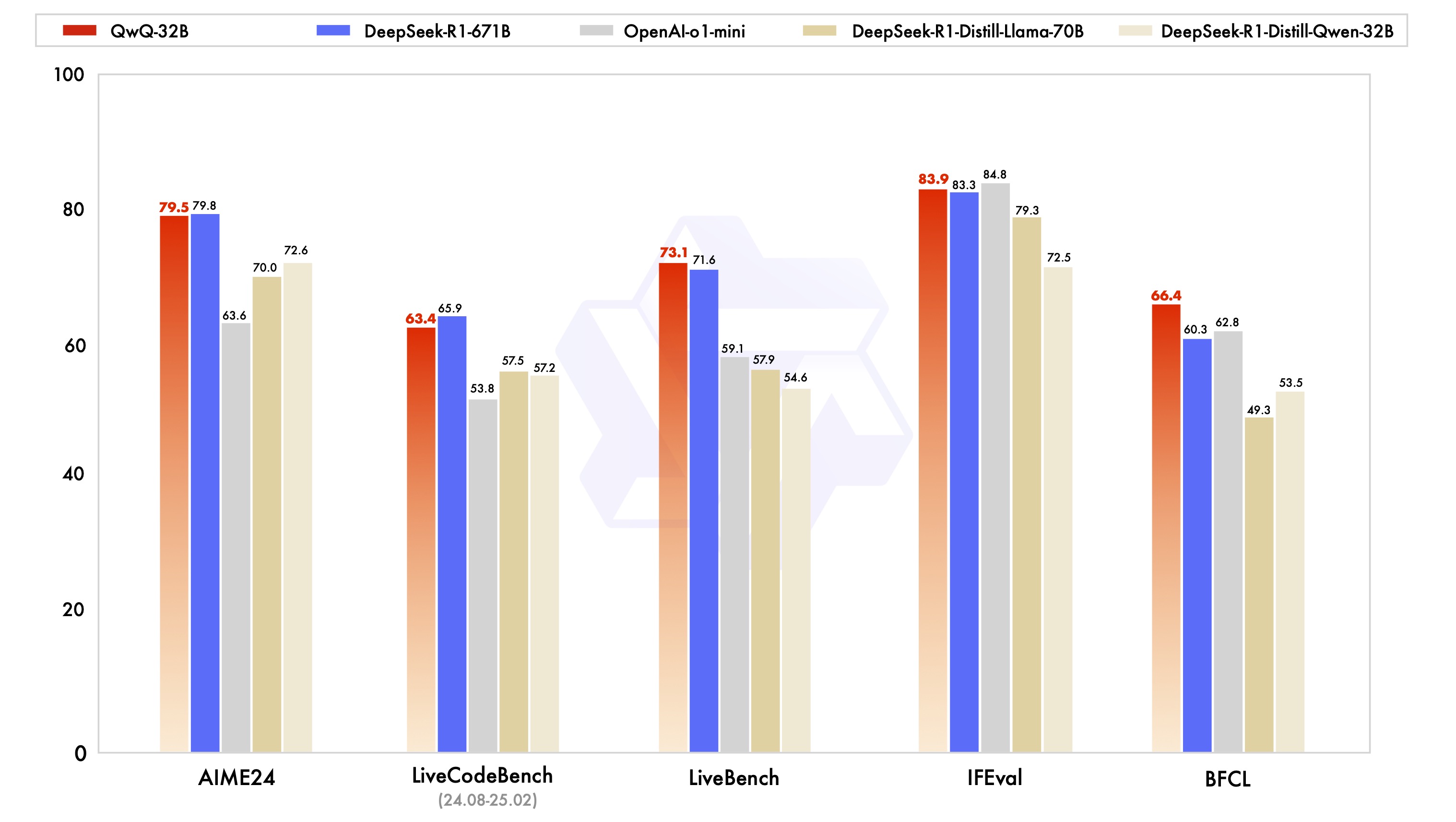

QwQ-32B is a powerful reasoning model in the Qwen series. Because it can think and reason, it achieves significantly enhanced performance on downstream tasks, especially hard problems, and is competitive with state-of-the-art reasoning models.

Key Features:

- Advanced Architecture with RoPE, SwiGLU, RMSNorm

- 32.5B Parameters (31.0B Non-Embedding)

- Long Context Length of 131,072 tokens

- State-of-the-art reasoning capabilities

Experience QwQ-32B

Try our model on Hugging Face Spaces or Qwen Chat; no installation required.

Features

What makes QwQ-32B special

QwQ-32B is a medium-sized reasoning model that combines powerful thinking capabilities with state-of-the-art performance.

Advanced Architecture

Built on a Transformer architecture with RoPE, SwiGLU, RMSNorm, and attention QKV bias
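To make the component names concrete, here is a minimal pure-Python sketch of three of them applied to a single vector: RMSNorm, the SwiGLU gated activation, and rotary position embedding (RoPE). It illustrates the general techniques and is not QwQ-32B's implementation: the function names, the `eps` value, the RoPE base of 10,000, and the pairing of adjacent dimensions are assumptions taken from the original formulations, and the real model's hyperparameters and tensor layout may differ. Attention QKV bias (a learned bias added to the query, key, and value projections) is not shown.

```python
import math

def rms_norm(x, weight, eps=1e-6):
    # RMSNorm: divide by the root mean square of x, then apply a learned per-dimension scale.
    # Unlike LayerNorm there is no mean subtraction and no bias. eps=1e-6 is an assumed value.
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [w * v / rms for v, w in zip(x, weight)]

def silu(v):
    # SiLU (swish): v * sigmoid(v), written in a numerically stable form for large |v|.
    if v >= 0:
        return v / (1.0 + math.exp(-v))
    e = math.exp(v)
    return v * e / (1.0 + e)

def matvec(w, x):
    # w is a list of rows; returns the matrix-vector product w @ x.
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]

def swiglu(x, w_gate, w_up):
    # SwiGLU: SiLU(W_gate x) multiplied elementwise by (W_up x).
    # In a Transformer MLP a down projection follows; it is omitted here.
    gate = matvec(w_gate, x)
    up = matvec(w_up, x)
    return [silu(g) * u for g, u in zip(gate, up)]

def rope(x, pos, base=10000.0):
    # RoPE: rotate each pair (x[i], x[i+1]) by the angle pos * base**(-i/d), so the dot
    # product of a rotated query and a rotated key depends only on their position offset.
    d = len(x)
    if d % 2:
        raise ValueError("RoPE needs an even dimension")
    out = []
    for i in range(0, d, 2):
        theta = pos * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        a, b = x[i], x[i + 1]
        out.extend([a * c - b * s, a * s + b * c])
    return out

if __name__ == "__main__":
    h = rms_norm([3.0, 4.0, 0.0, -1.0], [1.0, 1.0, 1.0, 1.0])
    print("rms_norm:", h)
    print("swiglu:", swiglu([1.0, 2.0], [[1.0, 0.0], [0.0, 1.0]], [[1.0, 1.0], [1.0, 1.0]]))
    print("rope:", rope(h, pos=5))
```

Because RoPE is a pure rotation, it leaves vector norms unchanged and encodes position only through relative angles, which is why it needs no learned position table.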

Sophisticated Structure

64 layers, with 40 query (Q) heads and 8 key/value (KV) heads in grouped-query attention (GQA)
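In grouped-query attention, several query heads share one key/value head. With 40 Q heads and 8 KV heads, each KV head serves 40 / 8 = 5 query heads, so the model computes and caches one-fifth as many key/value projections per token as it would with one KV head per query head. The sketch below assumes the common convention of assigning contiguous blocks of query heads to each KV head; `kv_head_for` and `group_heads` are hypothetical helper names.

```python
NUM_Q_HEADS = 40   # query heads, as listed on this page
NUM_KV_HEADS = 8   # key/value heads, as listed on this page

def kv_head_for(q_head, num_q=NUM_Q_HEADS, num_kv=NUM_KV_HEADS):
    # Map a query-head index to the KV head it reads keys and values from.
    # Assumes contiguous grouping: query heads 0-4 -> KV 0, 5-9 -> KV 1, and so on.
    if num_q % num_kv:
        raise ValueError("number of query heads must be a multiple of KV heads")
    if not 0 <= q_head < num_q:
        raise IndexError("query head out of range")
    return q_head // (num_q // num_kv)

def group_heads(num_q=NUM_Q_HEADS, num_kv=NUM_KV_HEADS):
    # Build {kv_head: [query heads that share it]}.
    groups = {kv: [] for kv in range(num_kv)}
    for q in range(num_q):
        groups[kv_head_for(q, num_q, num_kv)].append(q)
    return groups

if __name__ == "__main__":
    for kv, qs in group_heads().items():
        print(f"KV head {kv}: query heads {qs}")
    print("KV-cache reduction vs. one KV head per query head:", NUM_Q_HEADS // NUM_KV_HEADS, "x")
```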

Extended Context Length

Supports the full context length of 131,072 tokens for comprehensive analysis
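A long context has a memory cost: during generation, the keys and values of every previous token are cached. The sketch below gives a back-of-the-envelope estimate for a single sequence using 64 layers and 8 KV heads. The head dimension of 128 and the 2-byte (BF16) cache entries are assumptions not stated on this page, and real inference servers add overhead (paging, fragmentation, activations) on top of this figure.

```python
NUM_LAYERS = 64          # from this page
NUM_KV_HEADS = 8         # from this page
HEAD_DIM = 128           # assumption: not stated on this page
BYTES_PER_VALUE = 2      # assumption: BF16/FP16 cache entries, no KV-cache quantization
FULL_CONTEXT = 131_072   # maximum context length, from this page

def kv_cache_bytes(tokens, layers=NUM_LAYERS, kv_heads=NUM_KV_HEADS,
                   head_dim=HEAD_DIM, bytes_per_value=BYTES_PER_VALUE):
    # Factor 2: one key vector and one value vector are cached per KV head, per layer, per token.
    return 2 * layers * kv_heads * head_dim * bytes_per_value * tokens

if __name__ == "__main__":
    print(f"per token: {kv_cache_bytes(1) / 2**10:.0f} KiB")
    print(f"full context, 8 KV heads: {kv_cache_bytes(FULL_CONTEXT) / 2**30:.0f} GiB")
    print(f"full context, 40 KV heads (no GQA): {kv_cache_bytes(FULL_CONTEXT, kv_heads=40) / 2**30:.0f} GiB")
```

Under these assumptions the cache takes 256 KiB per token and 32 GiB for one sequence at the full context length; with 40 KV heads instead of 8 it would be five times larger (160 GiB), which is the saving GQA provides.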

Large Parameter Scale

32.5B total parameters with 31.0B non-embedding parameters for deep reasoning
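The parameter counts translate directly into a rough memory footprint for the weights alone. The sketch below assumes 2 bytes per parameter for BF16 and illustrative sizes of 1 byte and half a byte for 8-bit and 4-bit quantization; it ignores quantization scales, the KV cache, and activations, so actual requirements are higher. It also recovers the embedding parameter count as the difference between the two listed figures.

```python
TOTAL_PARAMS = 32.5e9          # total parameters, as listed on this page
NON_EMBEDDING_PARAMS = 31.0e9  # non-embedding parameters, as listed on this page

def weight_gib(params, bytes_per_param):
    # Memory needed to hold the weights alone, in GiB (2**30 bytes).
    return params * bytes_per_param / 2**30

# Parameters outside the non-embedding count: 1.5e9
embedding_params = TOTAL_PARAMS - NON_EMBEDDING_PARAMS

if __name__ == "__main__":
    print(f"embedding parameters: {embedding_params / 1e9:.1f}B")
    # Bytes per parameter are assumptions: BF16 = 2, 8-bit = 1, 4-bit = 0.5 (scales not counted)
    for label, nbytes in [("BF16", 2), ("8-bit", 1), ("4-bit", 0.5)]:
        print(f"{label}: {weight_gib(TOTAL_PARAMS, nbytes):.1f} GiB")
```

This prints roughly 60.5 GiB for BF16, 30.3 GiB at 8 bits, and 15.1 GiB at 4 bits.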

Enhanced Reasoning

Significantly improved performance on downstream tasks and hard problems

Easy Deployment

Supports various deployment options, including vLLM for optimal performance
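As one deployment path, vLLM can serve the model behind an OpenAI-compatible HTTP API, for example after starting it with `vllm serve Qwen/QwQ-32B`. The client sketch below uses only the Python standard library to build a chat-completion request for such a server. The server address (vLLM's default port 8000 on localhost), the model ID `Qwen/QwQ-32B` (its Hugging Face repository name), and the sampling values are assumptions for illustration; check the model card for recommended settings. `build_chat_request` and `send_chat_request` are hypothetical helper names.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000/v1"  # assumption: local vLLM server on its default port
MODEL_ID = "Qwen/QwQ-32B"              # assumption: model served under its Hugging Face repo name

def build_chat_request(prompt, temperature=0.6, top_p=0.95, max_tokens=4096):
    # Build (but do not send) an OpenAI-style chat-completion POST request.
    # The sampling values are illustrative defaults, not official recommendations.
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "top_p": top_p,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def send_chat_request(request, timeout=600):
    # Send the request to a running server and return the assistant's reply text.
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]

if __name__ == "__main__":
    req = build_chat_request("How many r's are in the word 'strawberry'?")
    print(req.full_url)
    print(req.data.decode("utf-8"))
```

Building the request separately from sending it keeps the payload easy to inspect; against a running server, `send_chat_request(build_chat_request(...))` returns the reply text. The long `timeout` reflects that reasoning models can generate many tokens before answering.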

What People Are Saying

Community feedback on QwQ-32B

Today, we release QwQ-32B, our new reasoning model with only 32 billion parameters that rivals cutting-edge reasoning model, e.g., DeepSeek-R1.

— Qwen (@Alibaba_Qwen) March 5, 2025

Blog: https://t.co/zCgACNdodj

HF: https://t.co/pfjZygOiyQ

ModelScope: https://t.co/hcfOD8wSLa

Demo: https://t.co/DxWPzAg6g8

Qwen Chat:… pic.twitter.com/kfvbNgNucW

The new QwQ-32B by Alibaba scores 59% on GPQA Diamond for scientific reasoning and 86% on AIME 2024 for math. It excels in math but lags in scientific reasoning compared to top models. pic.twitter.com/MLcKsumk4n

— xNomad (@xNomadAI) March 7, 2025

the new QwQ 32B model is insanely fast…

— David Ondrej (@DavidOndrej1) March 6, 2025

imagine once this is added to Infinite Thinking 😮💨 pic.twitter.com/z0kftcV8zW

China 🇨🇳 keeps up the challenge

— سعيد الكلباني (@smalkalbani) March 5, 2025

Alibaba has released QwQ-32B, a small model that nevertheless outperforms DeepSeek, which is about 20 times its size.

QwQ-32B outperforms all open-source models but trails o1 by a small margin.

You can use it here: https://t.co/ySOcPP3uFb pic.twitter.com/Y3M5kjjr4v

QwQ-32B changed local AI coding forever 🤯

— Victor M (@victormustar) March 7, 2025

We now have SOTA performance at home. Sharing my stack + tips ⬇️ pic.twitter.com/dL3pkCfdm5

KCORES LLM Arena test results for QwQ-32B are out!

— karminski-牙医 (@karminski3) March 6, 2025

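Qwen-QwQ-32B-BF16 currently scores 278.9 points (Figure 1), surpassing DeepSeek-V3 on the leaderboard but still well behind DeepSeek-R1.

But! It trails the online Qwen-2.5-Max-Thinking-QwQ-Preview by only 0.2 points! This means the version Qwen has open-sourced this time really is at the level of the online version! (Performance similar… pic.twitter.com/ifNGKt312a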

The AI world this week has been way too turbulent...

— すぐる | ChatGPTガチ勢 𝕏 (@SuguruKun_ai) March 10, 2025

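・App development evolves with Windsurf Previews

・ChatGPT now supports code editing in IDEs

・Sesame achieves ultra-realistic AI voices

・Alibaba QwQ-32B delivers compact, high-performance reasoning

・Mistral OCR announced as a document-understanding API

・A second Deepseek: the AI agent manus

Details explained in the thread 👇🧵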

[Surpassing DeepSeek] Another incredible AI model has arrived from China

— チャエン | デジライズ CEO《重要AIニュースを毎日最速で発信⚡️》 (@masahirochaen) March 6, 2025

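Alibaba has released its AI model "QwQ-32B".

Amazingly, with just 32B parameters it achieves accuracy surpassing DeepSeek R1 671B. In fact, Qwen's chat is free and offers deep thinking, web search, Artifacts, and video and image generation, which actually makes it the strongest. Nothing else this capable is free. ⇩Link pic.twitter.com/kbDCbQgzlT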